The technical team of the CREDITS blockchain platform has completed testing of the system’s Alpha version. The goal was to determine its maximum load and its resilience when processing large volumes of transactions, and to identify and resolve problems.

‘At peak load, the system could process a pool (block) of 488,403 transactions per second. During testing, we reworked the entire big data storage architecture, the API, and some individual platform components. The video of the testing procedure is posted on our YouTube channel,’ says Eugeniy Butyaev, CTO at CREDITS.

How was the testing done?

The platform node was deployed on a virtual machine in Microsoft Hyper-V for x64-based systems. The host hardware was an Intel® Xeon® E5-2630 (15 MB cache, 2.30 GHz, 7.20 GT/s Intel® QPI, Turbo Boost up to 2.80 GHz) with 8 GB RAM.

The virtual platform configuration consisted of 29 nodes and 1 signaling server, with processor speeds ranging from 2.2 to 2.8 GHz.

A robotic transaction generator was developed to carry out the testing. It worked in cycles: at each iteration, the robots formed a pool of random transactions and sent it into the system as fast as possible, pushing large pools of transactions every second until a preset time limit was reached. Client_6300.bat and TransactionSendler.exe were run on each server, while the screen was run on only one server. This made it possible to simulate a large number of transactions processed per second.
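The generator's cycle can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the actual Client_6300.bat / TransactionSendler.exe tooling: the transaction fields, the `send_pool` callback, and the pool size are all hypothetical.

```python
import random
import time
from typing import Callable

def random_transaction(rng: random.Random) -> dict:
    # Hypothetical fields: the article does not describe the test transaction format.
    return {
        "source": rng.getrandbits(64),
        "target": rng.getrandbits(64),
        "amount": rng.randint(1, 1_000_000),
    }

def run_generator(send_pool: Callable[[list], None], pool_size: int,
                  time_limit_s: float, seed: int = 0) -> int:
    """Cyclically form pools of random transactions and hand each one to
    `send_pool` as fast as possible until the preset time limit expires.
    Returns the number of pools sent."""
    rng = random.Random(seed)
    deadline = time.monotonic() + time_limit_s
    pools_sent = 0
    while time.monotonic() < deadline:
        pool = [random_transaction(rng) for _ in range(pool_size)]
        send_pool(pool)  # no throttling: the next pool is built immediately
        pools_sent += 1
    return pools_sent

# Usage: collect pools locally instead of sending them to a node.
received: list = []
count = run_generator(received.append, pool_size=1_000, time_limit_s=0.2)
print(f"{count} pools, {count * 1_000} transactions in 0.2 s")
```

Passing the sender in as a callback keeps the pacing logic separate from the transport, so the same loop could feed a local list (as above) or a network client.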

Technical implementation of the test:

The main objective was to test the ability of the network architecture to manage and process large volumes of transactions. This relied on asynchronous transaction processing by all nodes in the network through multi-threading. Multi-threading, in turn, was used only at the transaction formation and processing stages; simultaneous recording of several transactions, as well as reading and writing transactions to the database, went through a sequential queue.
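The split described above (parallel formation, serialized database access) can be illustrated with a small sketch. It assumes a thread pool for the formation step and an in-memory SQLite table standing in for the platform's storage; `form_transaction`, `process_and_store`, and the table layout are hypothetical. In CPython the GIL limits true parallelism for this toy workload, so the point is the structure, not the speed.

```python
import hashlib
import sqlite3
from concurrent.futures import ThreadPoolExecutor

def form_transaction(i: int) -> tuple[str, bytes]:
    # Hypothetical formation step: build a payload and derive its ID by hashing.
    payload = f"tx-{i}".encode()
    return hashlib.sha256(payload).hexdigest(), payload

def process_and_store(n: int, workers: int = 4) -> int:
    """Form `n` transactions on a thread pool, then write them to the
    database one at a time, in order, from a single thread."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE tx (id TEXT PRIMARY KEY, payload BLOB)")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map runs form_transaction concurrently but yields results
        # in submission order, so the writes below form a sequential queue.
        for tx_id, payload in pool.map(form_transaction, range(n)):
            conn.execute("INSERT INTO tx (id, payload) VALUES (?, ?)", (tx_id, payload))
    conn.commit()
    (count,) = conn.execute("SELECT COUNT(*) FROM tx").fetchone()
    conn.close()
    return count

print(process_and_store(10_000))
```

Only the calling thread ever touches the database connection, which is what keeps concurrent writes from interfering with one another.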

The test was run about 30 times in total and consistently reached 300,000 to 500,000 transactions per second; the upper bound was set by memory, since 500,000 transactions occupy up to 385 MB. The database reserves a maximum size of up to 808 bytes per transaction, depending on the balance, the length of the address, the transaction currency, the presence of a digital signature, etc. To simplify testing, we cut transactions down to 120–150 bytes. Otherwise, we would have had to deploy very powerful nodes with high network bandwidth and large storage: for example, one hour at 500,000 transactions per second would amount to about 1.387 TB.
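The memory and storage figures above can be reproduced with a few lines of arithmetic. The sketch assumes the article's "MB" means 2^20 bytes (MiB), which is what makes 500,000 × 808 bytes come out at about 385 MB; under that convention one hour is about 1.387 million MiB, while in strict SI units the same volume is about 1.45 TB. The constant names are illustrative.

```python
MAX_TX_BYTES = 808             # maximum reserved size per transaction (from the article)
TRIMMED_TX_BYTES = 150         # upper end of the 120-150 byte test transactions
MIB = 2 ** 20                  # assumption: the article's "MB" means 2**20 bytes

def volume_bytes(tps: int, tx_bytes: int, seconds: int = 1) -> int:
    """Raw data volume produced at `tps` transactions per second over `seconds`."""
    return tps * tx_bytes * seconds

per_second = volume_bytes(500_000, MAX_TX_BYTES)
per_hour = volume_bytes(500_000, MAX_TX_BYTES, seconds=3600)
trimmed_per_second = volume_bytes(500_000, TRIMMED_TX_BYTES)

print(f"{per_second / MIB:.0f} MiB per second at 808 bytes")         # 385
print(f"{per_hour / MIB / 1e6:.3f} million MiB per hour")             # 1.387
print(f"{per_hour / 1e12:.2f} TB per hour in SI units")               # 1.45
print(f"{trimmed_per_second / MIB:.1f} MiB per second at 150 bytes")  # 71.5
```

The last line shows why trimming mattered: at 150 bytes the per-second volume drops by a factor of more than five.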

Later, as the CREDITS network gains computing power, this problem will be addressed both by node owners and by a built-in archiving system for data storage.

Absence of EDS (electronic digital signature).

We were tasked with load-testing a stable version of the platform. EDS requires additional processing time and adds 64 bytes to each transaction. The feature is implemented in later versions of the platform using Ed25519, which will be described in an upcoming article, “THE SECURITY TECHNOLOGIES OF CREDITS PLATFORM”. It is currently in testing. Using this technology may increase transaction processing time by 8–10%.
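The size cost alone helps explain why signatures were left out of the throughput test. This sketch models only the extra bytes of a 64-byte Ed25519 signature on the 120–150 byte test transactions; it performs no actual signing and does not model the 8–10% processing-time cost. The function names are hypothetical.

```python
SIGNATURE_BYTES = 64  # size of an Ed25519 signature

def signed_overhead(tx_bytes: int) -> float:
    """Relative size increase from attaching one Ed25519 signature."""
    return SIGNATURE_BYTES / tx_bytes

def extra_bytes_per_second(tps: int) -> int:
    """Additional data per second when every transaction carries a signature."""
    return tps * SIGNATURE_BYTES

for size in (120, 150):
    print(f"{size}-byte transaction: +{signed_overhead(size):.0%} size")
print(f"extra data at 500,000 tx/s: {extra_bytes_per_second(500_000):,} bytes")
```

On the trimmed transactions the signature adds roughly 43–53% to each record, about 32 MB of extra data per second at 500,000 transactions per second.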

Validation of nodes.

We abandoned the DPoS validation algorithm and implemented a stable version of a BFT validation algorithm. For the testing phase, we chose a stripped-down but stable version of this protocol. At the current stage, it works as follows: a newly created transaction is sent through trusted nodes. The number of trusted nodes varies: in a network of 10 nodes, 50% are trusted, but no fewer than 3; in a network of 100 nodes or more, 10% are trusted. If 51% of the trusted nodes approve the transaction, it is sent to the master node to be included in the pool and recorded in the database. Transaction uniqueness was checked without checking the account balance. A balance check would reduce speed by only about 1–2%, but it would have required creating a large number of different accounts.
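The trusted-node rules and the 51% approval threshold can be expressed as a few small functions. This is a hedged sketch, not the CREDITS implementation: the article gives the trusted share only for 10-node and 100+-node networks, so applying the 50% rule to every network below 100 nodes, rounding up, and capping at the network size are assumptions, and all function names are hypothetical.

```python
def trusted_node_count(total_nodes: int) -> int:
    """Number of trusted nodes for a network of `total_nodes`.

    From the article: 50% (but at least 3) for a 10-node network, 10% from
    100 nodes up. ASSUMPTION: the 50% rule applies to every network below
    100 nodes, fractions round up, and the count never exceeds the network.
    """
    if total_nodes >= 100:
        return (total_nodes + 9) // 10          # ceil(10%)
    return min(total_nodes, max(3, (total_nodes + 1) // 2))  # ceil(50%), >= 3

def is_approved(approvals: int, trusted: int) -> bool:
    """True if at least 51% of trusted nodes approved (integer math, no floats)."""
    return approvals * 100 >= 51 * trusted

def accept_unique(tx_id: str, seen: set[str]) -> bool:
    """Uniqueness check only; the account balance is deliberately not checked."""
    if tx_id in seen:
        return False
    seen.add(tx_id)
    return True

# Usage: the 29-node test network under the assumption above.
trusted = trusted_node_count(29)
print(trusted, is_approved(8, trusted))
```

Integer comparison avoids floating-point edge cases at the exact 51% boundary.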

The following features were also omitted, as they were not required for testing system throughput:

– Charging processing fees payable to the main and trusted nodes;

– Processing related to smart contracts.

The nodes were started one by one while the network load was monitored.

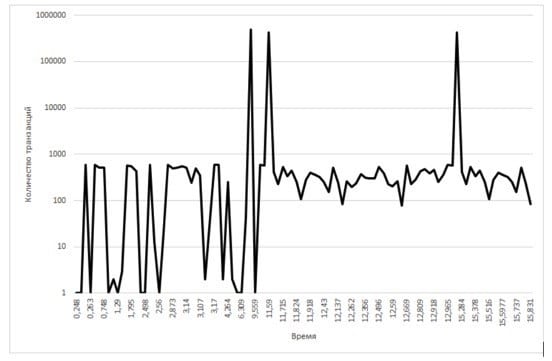

You can see its changes in the following graph:

As the graph shows, the average value ranged from 100 to 1,000 transactions per unit of time. As more nodes were started, pools were generated with much larger sets of transactions. At peak load, the system processed pools of up to 488,403 transactions per second. Both the maximum and the average values suggest a very high processing speed in the network.

What problems were discovered, and how were they solved?

The main obstacle to faster processing is working around physical limitations: the huge variety of hardware configurations, the technologies used to process and transmit data, and network bandwidth that differs from provider to provider. These and many other factors make optimizing platform performance seriously difficult. Rather weak equipment was used during the testing phase, and many features were disabled because of physical limitations. As the network develops, its equipment will be adjusted and optimized for the required tasks and for the volume of payment transactions and smart contracts.

The test results lead to two logical conclusions. First, transaction processing and recording are very slow on weak hardware. Second, speed drops as network load increases. The environment always strongly affects network throughput, and so does network load; this rule holds regardless of the complexity of the system.

Several other, more serious problems required a complete review and redesign of the big data storage architecture.

One difficulty was that the transaction generator waited for a response from the network connector each time it sent packets, which severely slowed the system. We decided to remove the response request from the connector, although this increased the risk of data loss during synchronization. At the same time, problems emerged with the data transfer protocol: the maximum UDP datagram size of 65,535 bytes did not allow larger packets to be transmitted. To solve both problems, that is, to reduce data loss during transmission and to increase processing speed, packet sizes were restricted to the maximum datagram size.
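A minimal sketch of the two changes, restricting packets to datagram size and sending without waiting for a reply, might look like this. The 4-byte sequence header and all function names are hypothetical. The usable payload is 65,507 bytes rather than 65,535 because, over IPv4, the 20-byte IP header and 8-byte UDP header count against the 65,535-byte limit. Because nothing is acknowledged, a lost datagram is simply gone, which is the data-loss risk described above.

```python
import socket
import struct

MAX_UDP_DATAGRAM = 65_535                     # limit of the 16-bit length fields
MAX_IPV4_PAYLOAD = MAX_UDP_DATAGRAM - 20 - 8  # minus IPv4 and UDP headers = 65,507
SEQ_HEADER = struct.Struct("!I")              # hypothetical 4-byte sequence number
CHUNK_BYTES = MAX_IPV4_PAYLOAD - SEQ_HEADER.size

def to_datagrams(payload: bytes) -> list[bytes]:
    """Split a packet into datagrams that each fit in a single UDP send."""
    return [
        SEQ_HEADER.pack(seq) + payload[offset:offset + CHUNK_BYTES]
        for seq, offset in enumerate(range(0, len(payload), CHUNK_BYTES))
    ]

def from_datagrams(datagrams: list[bytes]) -> bytes:
    """Reassemble chunks in sequence order (UDP may deliver them out of order)."""
    ordered = sorted(datagrams, key=lambda d: SEQ_HEADER.unpack_from(d)[0])
    return b"".join(d[SEQ_HEADER.size:] for d in ordered)

def send_without_reply(sock: socket.socket, addr: tuple[str, int], payload: bytes) -> None:
    """Fire-and-forget: send every datagram and do not wait for an acknowledgement."""
    for datagram in to_datagrams(payload):
        sock.sendto(datagram, addr)

packet = bytes(150_000)
print([len(d) for d in to_datagrams(packet)])  # [65507, 65507, 18998]
```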

Another difficulty was the lack of multi-threaded data handling in the node core: data was lost when several transactions were processed simultaneously. As a solution, we switched to processing transactions one at a time, in turn, once their number reaches a limiting value.
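One way to picture turn-by-turn processing with a limit is a bounded queue drained by a single consumer. This is a hedged sketch: the article does not say exactly what the limiting value measures, so treating it as the maximum number of pending transactions is an assumption, and `process_in_turn` and `LIMIT` are hypothetical.

```python
import queue
import threading

LIMIT = 1_000  # hypothetical limiting value for the pending backlog

def process_in_turn(batches: list[list[int]], limit: int = LIMIT) -> list[int]:
    """Several producer threads submit transactions; a single consumer
    processes them one at a time. The bounded queue caps the backlog at
    `limit`: once it is full, producers block until the consumer catches up."""
    backlog: queue.Queue = queue.Queue(maxsize=limit)
    processed: list[int] = []  # touched only by the consumer thread
    done = object()            # sentinel marking the end of input

    def consumer() -> None:
        while True:
            tx = backlog.get()
            if tx is done:
                return
            processed.append(tx)  # stand-in for the real processing step

    def producer(batch: list[int]) -> None:
        for tx in batch:
            backlog.put(tx)  # blocks while the queue already holds `limit` items

    worker = threading.Thread(target=consumer)
    worker.start()
    producers = [threading.Thread(target=producer, args=(b,)) for b in batches]
    for p in producers:
        p.start()
    for p in producers:
        p.join()
    backlog.put(done)  # queued after every transaction, so it is seen last
    worker.join()
    return processed

batches = [list(range(i * 1_000, (i + 1) * 1_000)) for i in range(4)]
print(len(process_in_turn(batches, limit=50)))  # 4000
```

Because only one thread ever modifies the processed data, no transactions are lost, at the cost of serializing that stage.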

‘When the stream of transactions from the generator became constant and continuous, a queue of read/write requests built up in the API. The data flow channels were overloaded, and because the screen used the same data flow, it did not show up-to-date information,’ says Eugeniy Butyaev, CTO, on Twitter. ‘We completely changed the big data storage architecture and the API to solve this problem.’

Conclusions & test results.

The data obtained in these tests are of great value to the blockchain community and to our project. The test helps assess the real permissible load on the network, which must be taken into account when anticipating a large flow of operations.

First, we have shown that our system can handle transaction volumes of around 500,000 per second, with the prospect of reaching millions. We are working to prove that processing millions of transactions per second, with an average processing time of a fraction of a second, is feasible.

Note that the code has not yet been optimized and has considerable potential for improvement and optimization.

The average minimum time for a transaction record to reach the database (including transfer between nodes, processing, and storage) is 1.302 microseconds (1 µs = 10⁻⁶ s). A separate report analyzing transaction speed will be published soon.

Monitoring and analyzing the results helps detect existing and potential problems. During our test, we naturally encountered many difficulties that required further work to improve the system.

The CREDITS technical team currently consists of more than 70 people who work on the project, issuing releases and improving the platform code.